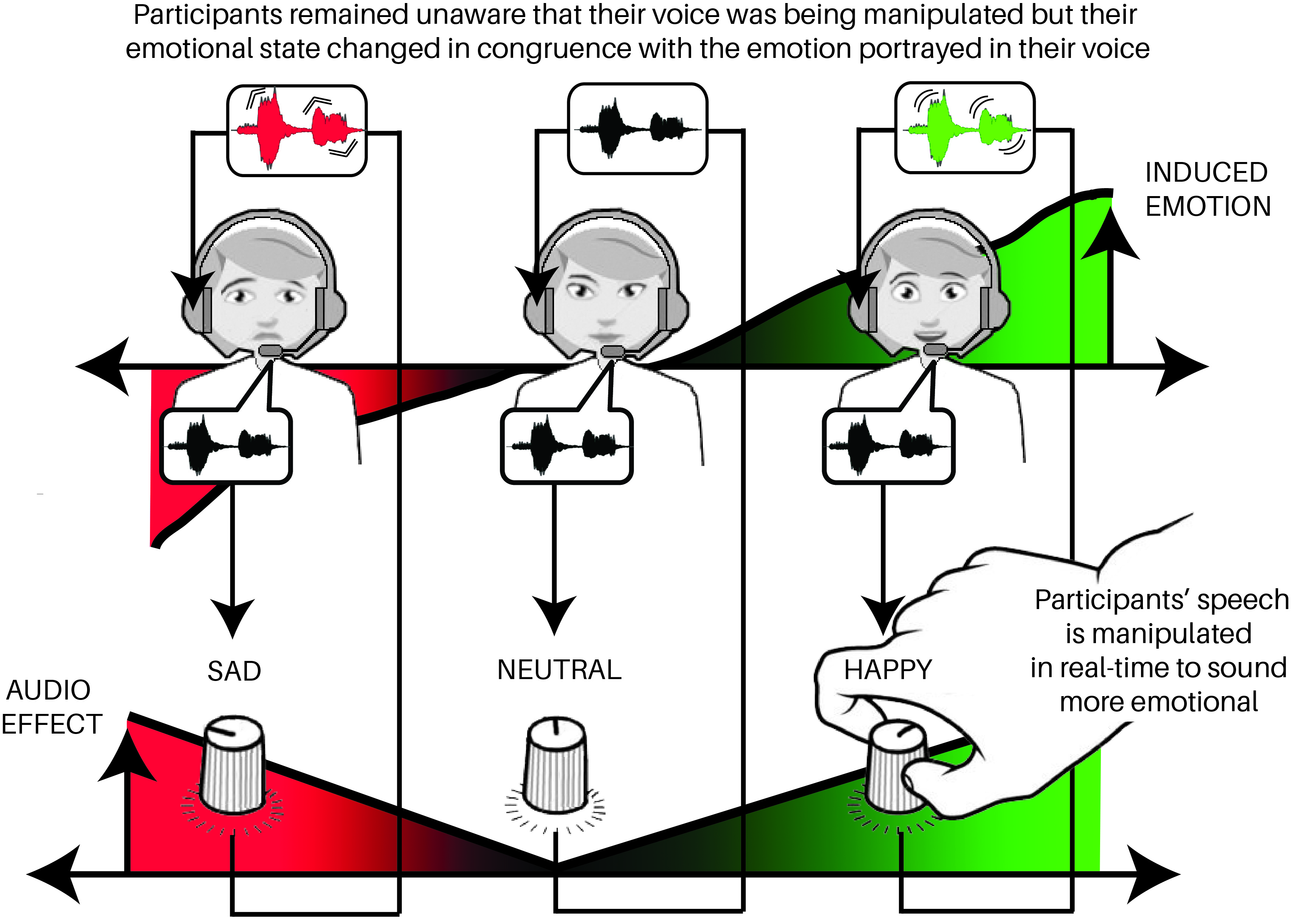

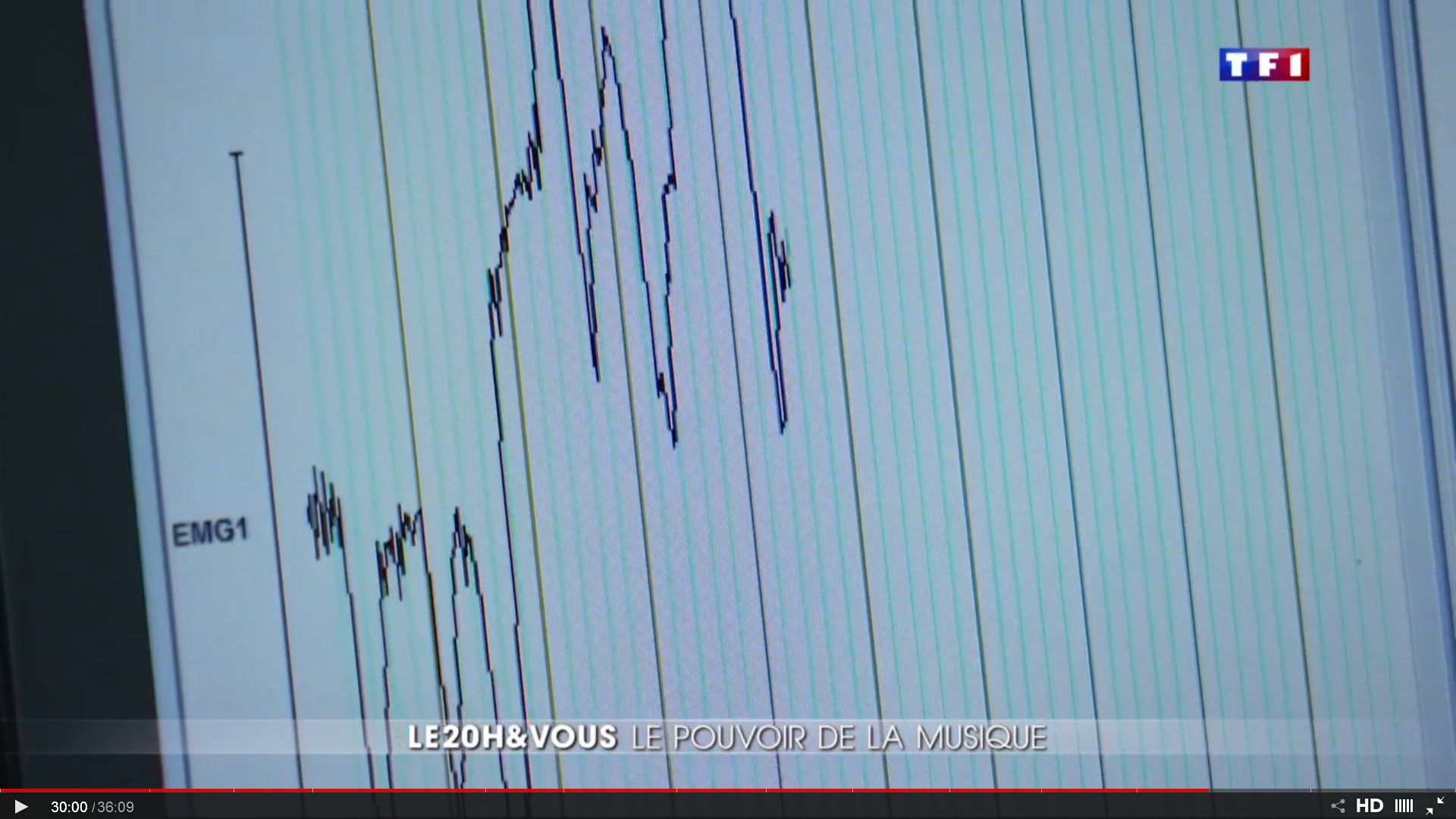

We created a digital audio platform that covertly shifts the emotional tone of participants’ voices toward happiness, sadness, or fear while they are talking. Independent listeners perceived the transformations as natural examples of emotional speech, but the participants remained unaware of the manipulation, indicating that we do not continuously monitor our own emotional signals. Instead, as a consequence of listening to their altered voices, the participants’ emotional state changed in congruence with the emotion portrayed. This result is the first evidence, to our knowledge, of peripheral feedback on emotional experience in the auditory domain.

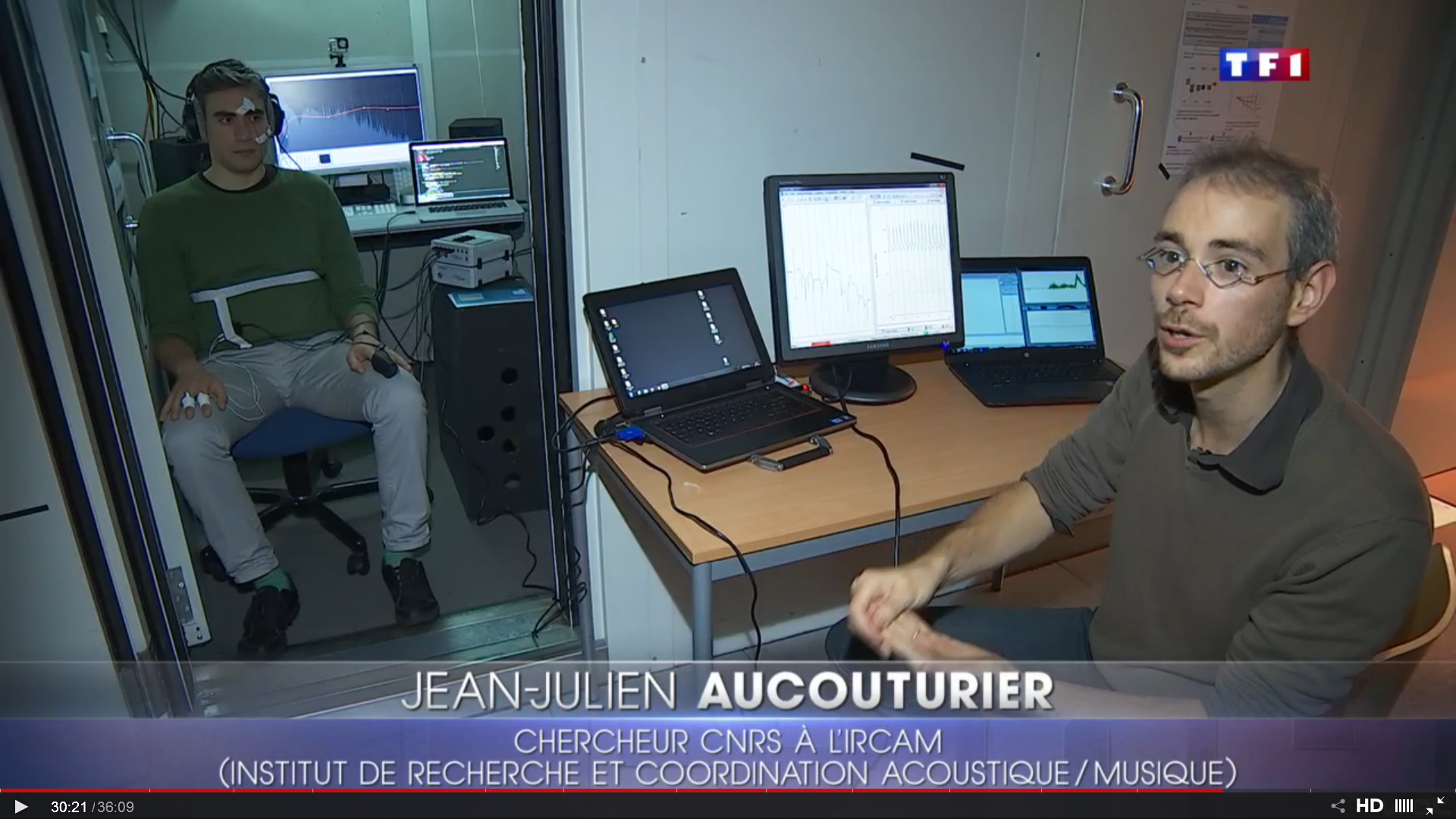

The study is a collaboration between the CREAM team at the Science and Technology of Music and Sound Lab (STMS; IRCAM/CNRS/UPMC) and the LEAD Lab (CNRS/University of Burgundy) in France, Lund University in Sweden, and Waseda University and the University of Tokyo in Japan.

Aucouturier, J.J., Johansson, P., Hall, L., Segnini, R., Mercadié, L. & Watanabe, K. (2016) Covert Digital Manipulation of Vocal Emotion Alter Speakers’ Emotional State in a Congruent Direction, Proceedings of the National Academy of Sciences, doi: 10.1073/pnas.1506552113

The article is open access at: http://www.pnas.org/content/early/2016/01/05/1506552113

See our press release: http://www.eurekalert.org/pub_releases/2016-01/lu-twy011116.php

A piece in Science Magazine reviewing the work: http://news.sciencemag.org/brain-behavior/2016/01/how-change-your-mood-just-listening-sound-your-voice

Download the emotional transformation software at: http://cream.ircam.fr/?p=44
