The CREAM team is seeking a French-speaking student for an M2-level research internship on the influence of acoustic voice manipulation on pre-operative anxiety.

Period: March to July 2019

Supervisors: Gilles Guerrier (Physician, Surgical Anesthesia and Critical Care, Hôpital Cochin, AP-HP, Paris) & Jean-Julien Aucouturier (CNRS Research Scientist, IRCAM, Paris)

Context: The internship is offered as part of a clinical study run jointly by Hôpital Cochin and the Institut de Recherche et Coordination Acoustique/Musique (IRCAM) in Paris. It is funded by the ANR project REFLETS ("Rétroaction Faciales et Linguistiques et Etats de Stress Traumatiques", i.e. facial and linguistic feedback and traumatic stress states), which studies the impact of the voice on emotions.

Description du projet : L’anxiété pré-opératoire est un phénomène connu depuis longtemps chez les patients qui vont bénéficier d’une chirurgie [Corman Am J Surg 1958]. L’anxiété a un impact sur le niveau et la qualité de rétention d’informations et de consignes fournies par les différents interlocuteurs (chirurgien, anesthésiste, personnel paramédical et

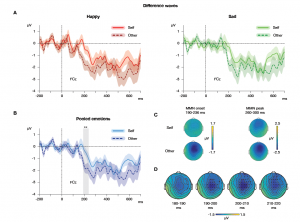

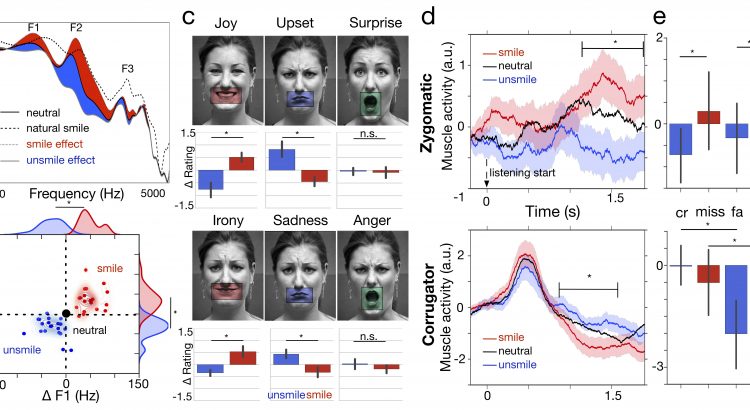

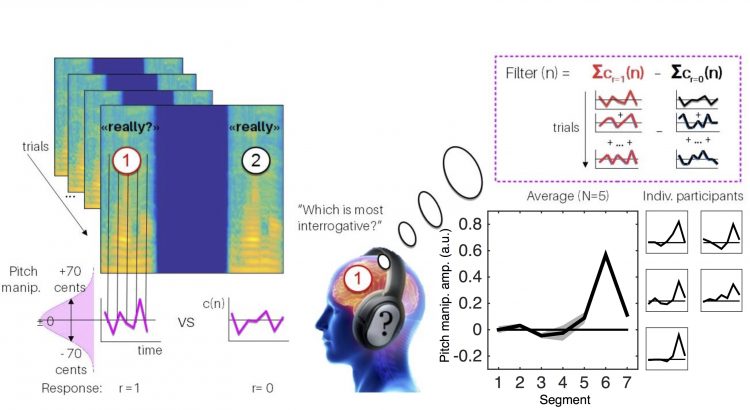

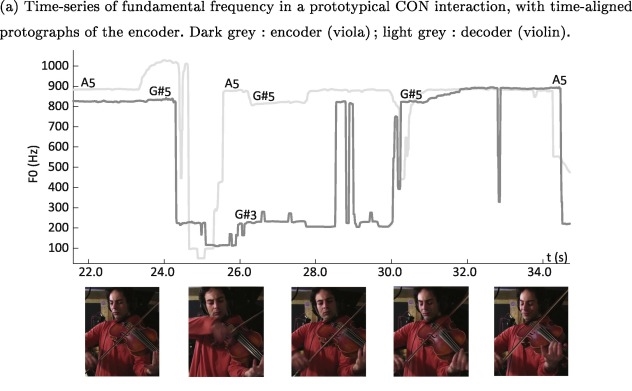

administratif) [Johnston Ann Behav Med 1993]. Les moyens de limiter cette anxiété de façon non médicamenteuse sont actuellement peu nombreux. Nos travaux récents ont montré qu’il est possible de transformer le son d’une voix parlée en temps-réel, au fur et à mesure d’une conversation, pour lui donner des caractéristiques émotionnelles [Arias IEEE Trans. Aff. Comp. 2018]. Sur des volontaires sains, nous avons montré qu’une voix transformée pour être plus souriante a un impact émotionnel positif sur l’interlocuteur [Arias Cur. Biol. 2018]. Nous proposons d’évaluer l’impact de ce dispositif de modulation de la voix humaine sur la qualité de la prise en charge de la population des patients anxieux avant chirurgie.

Student's role in the project: The intern will first help enroll participants in the outpatient surgery department of Hôpital Cochin, under the supervision of Dr. Gilles Guerrier. Their responsibilities will be to explain the protocol to participants, obtain their consent, set up the required equipment (headphones, microphone), and make sure data are properly collected and recorded before and after the procedure. Second, the intern will take part in analyzing and interpreting the collected data and in writing a report or scientific article.

Desired profile: We are looking for a student with a medical or paramedical background (e.g. clinical research associate (ARC), speech therapy), or with a background in experimental cognitive science oriented toward clinical research and healthcare innovation. The ideal candidate will have experience with, and an interest in, interacting with patients in a hospital setting; a good knowledge of clinical-trial procedures (randomization, consent, etc.); and excellent skills in managing a patient enrollment and follow-up schedule and in keeping recorded data traceable with computer tools. Familiarity with sound recording or with the human voice will be a plus.

Conditions:

The internship will be governed by a tripartite agreement between the student, the institution delivering the M2, and IRCAM. It will be paid a flat-rate stipend of approximately €500 per month.

How to apply: Send a CV and a cover letter addressing the points listed above to Gilles Guerrier (guerriergilles@gmail.com) and Jean-Julien Aucouturier (aucouturier@gmail.com).