On the occasion of the PhD defense of Pablo Arias on Dec. 18th, the CREAM lab is happy to organize a mini-symposium on recent results on the evolution and universality of vocal and facial expressions with two prominent researchers from the field, Dr. Rachael Jack (School of Psychology, University of Glasgow) and Prof. Tecumseh Fitch (Department of Cognitive Biology, University of Vienna). The two talks will be followed in the afternoon by the PhD viva of Pablo Arias on “auditory smiles”, which is also public.

Date: Tuesday December 18th

Hours: 10h30-12h (symposium), 14h (PhD viva)

Place: Salle Stravinsky, Institut de Recherche et Coordination en Acoustique/Musique (IRCAM), 1 Place Stravinsky 75004 Paris. [access]

Tuesday Dec. 18th, 10h30-12h

Symposium: The evolution of facial and vocal expressions (Dr. Rachael Jack, Prof. Tecumseh Fitch)

10h30-11h15 – Dr. Rachael Jack (University of Glasgow, UK)

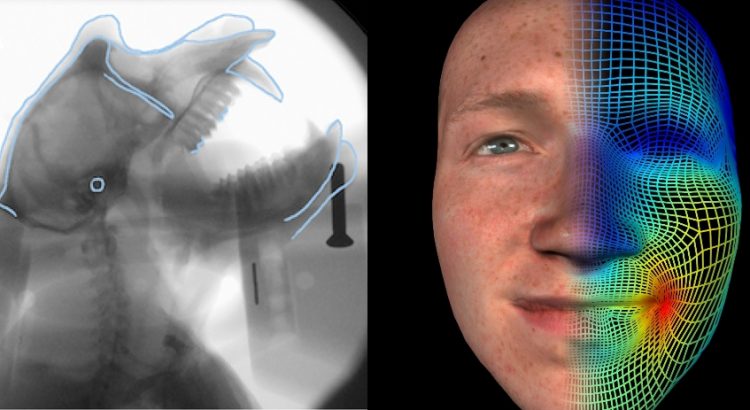

Modelling Dynamic Facial Expressions Across Cultures

Facial expressions are one of the most powerful tools for human social communication. However, understanding how facial expressions communicate is challenging due to their sheer number and complexity. Here, I present a program of work designed to address this challenge using a combination of social and cultural psychology, vision science, data-driven psychophysical methods, mathematical psychology, and 3D dynamic computer graphics. Across several studies, I will present work that precisely characterizes how facial expressions of emotion are signaled and decoded within and across cultures, and shows that cross-cultural emotion communication comprises four, not six, main categories. I will also highlight how this work has the potential to inform the design of socially and culturally intelligent robots.

11h15-12h – Prof. Tecumseh Fitch (University of Vienna, Austria)

The evolution of voice formant perception

Abstract t.b.a.

Tuesday Dec. 18th, 14h-16h30

PhD Defense: Auditory smiles (Pablo Arias)

At 14h on the same day, Pablo Arias (PhD candidate, Sorbonne Université) will defend his PhD thesis, conducted in the CREAM Lab / Perception and Sound Design Team (STMS – IRCAM/CNRS/Sorbonne Université). The viva is public, and all are welcome.

14h-16h30 – M. Pablo Arias (IRCAM, CNRS, Sorbonne Université)

The cognition of auditory smiles: a computational approach

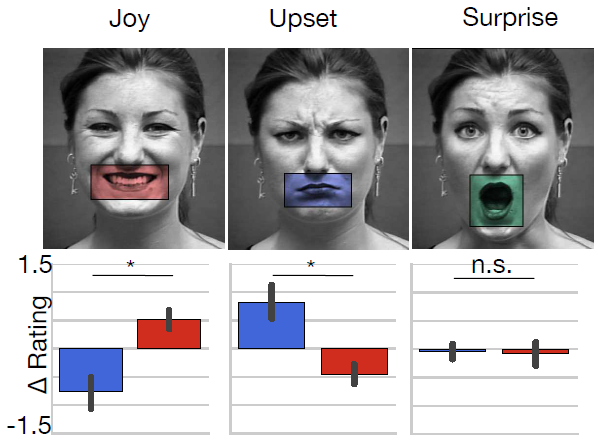

Emotions are the fuel of human survival and social development. Not only do we undergo primitive reflexes mediated by ancient brain structures, but we also consciously and unconsciously regulate our emotions in social contexts, affiliating with friends and distancing from foes. One of our main tools for emotion regulation is facial expression and, in particular, smiles. Smiles are deeply grounded in human behavior: they develop early, and are used across cultures to communicate affective states. The mechanisms that underlie their cognitive processing include interactions not only with visual systems, but also with emotional and motor systems. Smiles trigger facial imitation in their observers, a reaction thought to be a key component of the human capacity for empathy. Smiles, however, are not only experienced visually, but also have audible consequences. Although visual smiles have been widely studied, almost nothing is known about the cognitive processing of their auditory counterpart.

Understanding how auditory smiles are processed is the aim of this dissertation. In this work, we characterise and model the acoustic fingerprint of smiles, and use it to probe how auditory smiles are processed cognitively. We provide evidence that (1) auditory smiles can trigger unconscious facial imitation, that (2) they are cognitively integrated with their visual counterparts during perception, and that (3) the development of these processes does not depend on pre-learned visual associations. We conclude that the embodied mechanisms associated with the visual processing of facial expressions of emotions are in fact equally found in the auditory modality, and that their cognitive development is at least partially independent of visual experience.

Download link: Thesis manuscript

Thesis Committee:

- Prof. Tecumseh Fitch – Reviewer – Department of Cognitive Biology, University of Vienna

- Dr. Rachael Jack – Reviewer – School of Psychology, University of Glasgow

- Prof. Julie Grèzes – Examiner – Département d’Etudes Cognitives, Ecole Normale Supérieure, Paris

- Prof. Catherine Pelachaud – Examiner – Institut des Systèmes Intelligents et de Robotique, Sorbonne Université/CNRS, Paris

- Prof. Martine Gavaret – Examiner – Service de Neurophysiologie, Groupement Hospitalier Sainte-Anne, Paris

- Dr. Patrick Susini – Thesis Director – STMS, IRCAM/CNRS/Sorbonne Université, Paris

- Dr. Pascal Belin – Thesis Co-director – Institut des Neurosciences de la Timone, Aix-Marseille Université

- Dr. Jean-Julien Aucouturier – Thesis Co-director – STMS, IRCAM/CNRS/Sorbonne Université, Paris