Exciting news: Laura, Pablo, Emmanuel and JJ from the lab will be “touring” Japan (the academic version thereof, at least) this coming week, with two events planned in Tokyo:

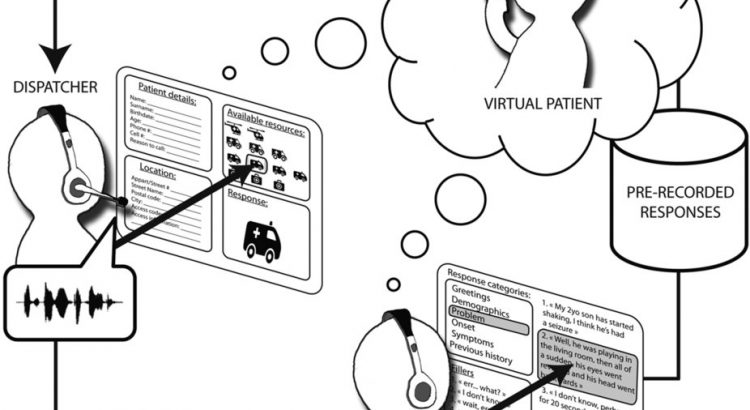

- We’ll present our voice transformation software DAVID and our related research on emotional vocal feedback at the “Europa Science House” of the Science Agora event at Miraikan – Dates: Thursday 3 – Sunday 6 Nov, 10:00-17:00 / Venue: Miraikan 1F Booth Aa. This is at the kind invitation of the European Union’s Delegation in Japan.

- We are co-organizing a public workshop on Music cognition, emotion and audio technology with our friend Tomoya Nakai from the University of Tokyo (he did all the organizing work, really), on Monday November 7th. It is hosted by Kazuo Okanoya’s laboratory.

If you’re around and want to chat, please drop us a line. “We are very happy to be coming to Japan!!”